Analyzing Your Practice Tests, Part 1

The purpose of taking a practice test is two-fold. First, you are testing yourself to see whether you have learned what you have been trying to learn. Second, you are diagnosing your strengths and weaknesses so that you can build a study plan going forward.

This article is an update of one originally posted about 18 months ago. Why am I posting a new version? Two reasons. One, some functionality has been added to our score reports since then, so I think that we have some better ways of interpreting the data now. Two, I think that the old method could be stripped down a bit; it was a little too complicated. Though, honestly, it's still pretty complicated... there's a lot to learn from practice tests!

As I did last time, I'll base my discussion on the metrics that are given in ManhattanGMAT tests, but you can extrapolate to other tests that give you similar performance data (note: you need per-question timing and difficulty level in addition to percentage correct/incorrect data). It takes about 45 to 60 minutes to do this analysis, not counting any time spent analyzing individual problems.

So here's what I do when I review a student's test (or tests)!

First, naturally, I look at the score. I also check whether the student did the essays (if she didn't, I assume the score is a little inflated) and I ask the student whether she used the pause button, took extra time, or did anything else that wouldn't be allowed under official testing guidelines. All of this gives me an idea of whether the student's score might be a bit inflated.

Problem Lists

Next, I look at the problem lists for the quant and verbal sections; the problem lists show each question, in order as it was given to the student, as well as various data about those questions.

First, I scan down the "correct / incorrect" column to see whether the student had any strings of 4 or more answers wrong. If so, I also look at the time spent; perhaps the student was running out of time and had to rush. I also look at the difficulty levels because sometimes I'll see this: the difficulty level is high for the first problem or two, and the timing is also way too long. On the later questions, the difficulty level is lower, but the timing is also too fast. Essentially, the person had a sense that she spent too long on a couple of hard questions, so she sped up... and then she not only got the hard questions wrong but she also got the easier questions wrong because she was rushing.
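The scan for strings of wrong answers can be sketched in a few lines of Python. This is only an illustration, assuming you have copied the "correct / incorrect" column into a list of booleans; the function name `wrong_streaks` is made up for this example and is not part of any score report.

```python
from typing import List, Tuple


def wrong_streaks(correct: List[bool], min_len: int = 4) -> List[Tuple[int, int]]:
    """Return (first_question, last_question) pairs, 1-indexed, for every
    run of at least `min_len` consecutive incorrect answers."""
    streaks = []
    start = None  # question number where the current run of misses began
    for number, is_correct in enumerate(correct, start=1):
        if not is_correct:
            if start is None:
                start = number
        else:
            if start is not None and number - start >= min_len:
                streaks.append((start, number - 1))
            start = None
    # A run of misses may extend to the very last question.
    if start is not None and len(correct) - start + 1 >= min_len:
        streaks.append((start, len(correct)))
    return streaks


# Hypothetical section: questions 2 through 5 were all missed.
answers = [True, False, False, False, False, True, False, False]
print(wrong_streaks(answers))
```

Once you have the question numbers for a streak, go back to the time and difficulty columns for those same questions to see whether rushing was the cause.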

Then I scan down the Cumulative Time (how much time you've spent cumulatively on the test) and Target Cumulative Time (how much time you should have spent cumulatively) columns. I specifically look for periods when the student is more than 2 minutes off of the Target time. When I see that the student is too fast or too slow, I try to figure out what happened: where was the student spending too much time or rushing? What happened on those problems?
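If you have exported the per-question times and the Target Cumulative Time column, the "more than 2 minutes off" check might look like the sketch below. The function name and the sample numbers are hypothetical; only the 2-minute tolerance comes from the description above.

```python
from itertools import accumulate
from typing import List, Tuple


def off_pace_questions(
    times_sec: List[int],
    target_cumulative_sec: List[int],
    tolerance_sec: int = 120,
) -> List[Tuple[int, int]]:
    """Return (question_number, seconds_off_target) for each point where the
    cumulative time differs from the target by more than `tolerance_sec`.
    Positive values mean behind pace (too slow); negative mean ahead."""
    cumulative = accumulate(times_sec)  # running total of time spent
    flagged = []
    for number, (actual, target) in enumerate(
        zip(cumulative, target_cumulative_sec), start=1
    ):
        diff = actual - target
        if abs(diff) > tolerance_sec:
            flagged.append((number, diff))
    return flagged


# Hypothetical data: a 5-minute question puts the student 3 minutes behind.
times = [120, 300, 60, 30]
targets = [120, 240, 360, 480]
print(off_pace_questions(times, targets))
```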

Then I scan down the Time column, which lists the time spent per question. Even if the student managed to stay on time cumulatively, the student still might exhibit what I call "up and down" timing: spending too long on some questions and then rushing on others to catch up. Even if you finish the section on time, you still might have a timing imbalance.

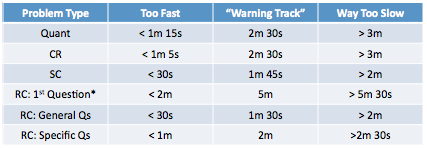

Here are the timing metrics by problem type:

*The first RC question includes the time it takes to read the passage.

Again, I'm looking for patterns. How many times did the student hit the "warning track" or spend "way too much time," and how many times did the student move too quickly? What was the cumulative outcome of these statistics?

If there are more than a few (regardless of whether they're right or wrong), then the student has a timing problem. For example, if the student had 4 questions over 3m each, then I can guarantee you that the student missed other questions elsewhere simply due to speed; that extra time had to come from somewhere. You know those times when you realize you made an error on something that you knew how to do? Well, if you were also moving quickly on that problem, your timing was at least partially a cause of that error.

Alternatively, if there is even one that is very far over the "way too slow" mark, there's a timing problem. If you have one quant question on which you spent 4m30s, you might let yourself do this on more questions on the real test, and there goes your score. (By the way, the only potentially acceptable reason is: "I was at the end of the section and knew I had extra time, so I used it." And my next question would be: why did you have so much extra time?)

For each section, I get a general sense of whether there is

- not a timing problem (e.g., only 1 or 2 questions in the "too fast" or "warning track" range)

- a small timing problem (e.g., 3 questions in the "warning track" range, or 1 problem in the "way too slow" category, plus a few "way too fast" incorrect questions), or

- a large timing problem (e.g., 4+ questions in the "warning track" range, or 2+ questions that are "way too slow," plus multiple "way too fast" incorrect questions).
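One rough way to turn those three categories into a rule of thumb is sketched below. Treat it as one possible reading, not a formula: the examples above are only examples, the cutoffs for each range vary by problem type, and the numbers passed in here are placeholders you would replace with the values from the timing metrics. For simplicity, this sketch looks only at the "warning track" and "way too slow" counts and treats the two ranges as separate buckets.

```python
from collections import Counter
from typing import List


def bucket(time_sec: int, too_fast_below: int, warning_from: int, too_slow_from: int) -> str:
    """Place one question's time into a timing bucket.
    All three cutoffs are supplied by the caller (assumed values)."""
    if time_sec < too_fast_below:
        return "too_fast"
    if time_sec >= too_slow_from:
        return "way_too_slow"
    if time_sec >= warning_from:
        return "warning_track"
    return "ok"


def timing_severity(
    times_sec: List[int], too_fast_below: int, warning_from: int, too_slow_from: int
) -> str:
    """Return "none", "small", or "large" for one section and one problem type."""
    counts = Counter(
        bucket(t, too_fast_below, warning_from, too_slow_from) for t in times_sec
    )
    if counts["warning_track"] >= 4 or counts["way_too_slow"] >= 2:
        return "large"
    if counts["warning_track"] >= 3 or counts["way_too_slow"] >= 1:
        return "small"
    return "none"


# Placeholder cutoffs (in seconds) chosen only for illustration.
print(timing_severity([100, 150, 200, 130], too_fast_below=60, warning_from=150, too_slow_from=180))
```

Because the cutoffs differ by problem type, you would run this separately for each type rather than on a whole section at once.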

Note that I don't specify above whether the "warning track" and "too slow" questions were answered correctly or incorrectly; that doesn't matter. It isn't (necessarily) okay to spend too much time on a question just because that question was answered correctly.

If a timing problem seems to exist, I try to figure out roughly how bad the problem is. How many problems fit into the different categories? Approximately how much time total was spent on the "way too slow" problems? How many "too fast" questions did that cost the student? You may also want to examine the problems themselves to locate careless errors. How many of your careless errors occurred on problems when you were rushing?
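To put rough numbers on those questions, you could tally the time spent on the slow problems and count the rushed misses, as in this sketch. The `Question` record, the function name, and every cutoff below are assumptions made for illustration; use the target and cutoff times that apply to the problem type you are reviewing.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Question:
    time_sec: int
    correct: bool


def timing_cost(
    questions: List[Question], target_sec: int, too_slow_from: int, too_fast_below: int
) -> Dict[str, int]:
    """Summarize how much time the slow questions consumed and how many
    rushed questions were missed. All cutoffs are caller-supplied assumptions."""
    slow = [q for q in questions if q.time_sec >= too_slow_from]
    rushed_wrong = [
        q for q in questions if q.time_sec < too_fast_below and not q.correct
    ]
    return {
        "slow_count": len(slow),
        "slow_total_sec": sum(q.time_sec for q in slow),
        # Time beyond the per-question target: this had to come from somewhere.
        "slow_excess_sec": sum(q.time_sec - target_sec for q in slow),
        "rushed_wrong_count": len(rushed_wrong),
    }


# Hypothetical section with placeholder cutoffs.
section = [
    Question(270, True),
    Question(200, False),
    Question(40, False),
    Question(50, True),
    Question(120, True),
]
print(timing_cost(section, target_sec=120, too_slow_from=180, too_fast_below=60))
```

The excess figure is only an estimate, but comparing it with the number of rushed misses makes the trade-off concrete.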

Also, be flexible with the assessment. For instance, if you answered a quant question incorrectly in 45 seconds, but you knew that you had no idea how to do the question, so you chose to guess and move on, that was a good decision. You don't need to count that against you in your analysis.

Finally, I see whether there are any patterns in terms of the content area (for example, perhaps 80% of the "too slow" quant problems were problem solving problems or two of the "too slow" SC problems were modifier problems). We're going to run the assessment reports next to dive deep into this content data, but do try to get a high-level sense of any obvious patterns.
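A quick tally by content area can surface a pattern like the 80% example. The sketch below assumes you have listed each question as a (content area, time in seconds) pair; the function name and the 180-second cutoff are placeholders, not values from the score report.

```python
from collections import Counter
from typing import Dict, List, Tuple


def slow_share_by_area(
    questions: List[Tuple[str, int]], too_slow_from: int
) -> Dict[str, float]:
    """Return, for each content area, its share of the "too slow" questions.
    `too_slow_from` is a caller-supplied cutoff in seconds (an assumption)."""
    slow_areas = Counter(area for area, t in questions if t >= too_slow_from)
    total = sum(slow_areas.values())
    if total == 0:
        return {}
    return {area: count / total for area, count in slow_areas.items()}


# Hypothetical quant section: 4 of the 5 slow questions are problem solving.
quant = [
    ("problem solving", 200),
    ("problem solving", 210),
    ("data sufficiency", 190),
    ("problem solving", 90),
    ("problem solving", 220),
    ("problem solving", 230),
]
print(slow_share_by_area(quant, too_slow_from=180))
```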

All of the above allows you to quantify just how bad any timing problems are. Seeing the data can help you start to get over that mental hurdle ("I can get this right if I just spend some more time!") and start balancing your time better. Plus, the stats on question type and content area will help you to be more aware of where you tend to get sucked in; half the battle is being aware of when and where you tend to spend too much time.

If you do have timing problems, this article on time management should be useful.

Now we're done looking at the problem lists; in the next article, we'll analyze the data given in the assessment reports.
